Tip 2 - Information quality may decrease as companies cut back on trust and safety funding

Mass layoffs and the removal of trust and safety rules may lower the quality of content on your feed

In recent years, social media companies across the board have cut back substantially on trust and safety, through mass layoffs and the elimination of critical safety policies that protected users.

These cuts have led to more misleading information, hate speech and other problematic content:

Layoffs of over 40,000 employees across social media companies have severely diminished Trust & Safety teams

There were over 40,000 layoffs across Meta, X (formerly Twitter) and Google:

Meta’s layoffs of 21,000 jobs “had an outsized effect on the company’s Trust & Safety work”

X (formerly Twitter) fired 80% of engineers dedicated to Trust and Safety following Elon Musk’s takeover

Google laid off one-third of the employees in a unit aiming to protect society from misinformation, radicalization, toxicity and censorship

Companies eliminated 17 critical policies that curbed hate speech, harassment and misinformation

Between November 2022 and November 2023, Meta, X (formerly Twitter) and YouTube “eliminated a total of 17 critical policies across their platforms”:

Meta now puts the responsibility on users to avoid content flagged as false by third-party fact checkers (see tip 4 to adjust your settings!)

X (formerly Twitter) disabled features to report election disinformation in the US*

X (formerly Twitter) began allowing political ads on its platform, and Meta relaxed transparency and labeling requirements on political ads

Supporting Research

Studies have documented the impacts of cuts to trust and safety at social media companies in recent years.

A report by Free Press, a civil society organization, details the impact of mass layoffs and cuts to critical safety policies at Meta (formerly Facebook), X (formerly Twitter), and YouTube between November 2022 and November 2023

An investigation by researchers at NYU found that 90% of the ads they tested on TikTok containing false and misleading election information evaded detection, despite the platform’s policy prohibiting political ads

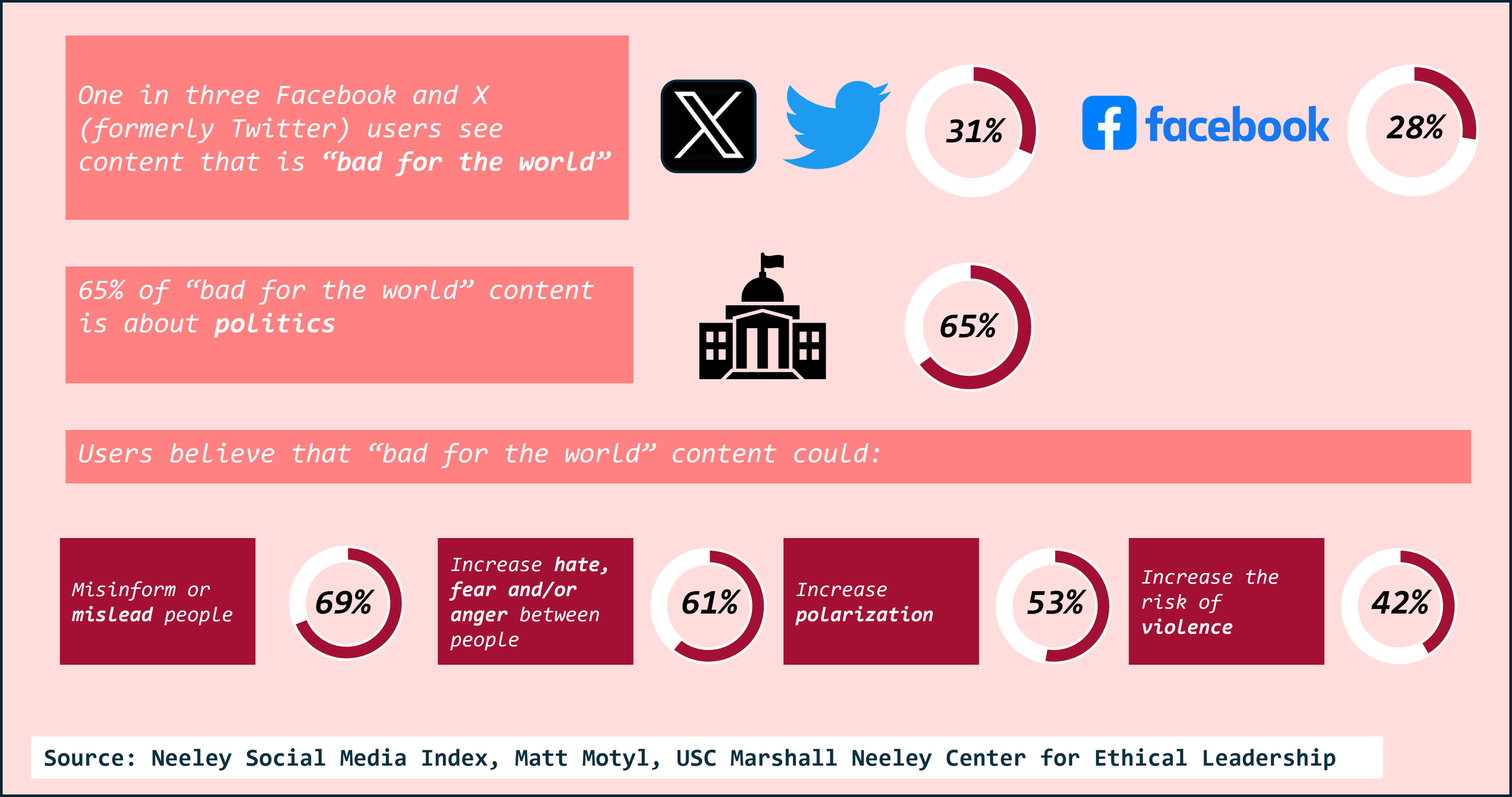

A survey by the Neely Center for Leadership and Ethical Decision Making at USC Marshall finds that one-third of Twitter and Facebook users come across harmful content on these platforms, with politics being the most frequent topic related to harmful content

*We note that although users in the US can no longer report election disinformation, staff at government agencies such as the NASS can escalate false election information to X